Abstract

Linked Data Event Streams (LDES) is a technical standard that applies linked data principles to data streams. It allows data to be exchanged between silos sustainably and cost-effectively, using domain-specific ontologies, for both fast and slow data streams. LDES provides a base API for datasets, enabling automatic replication and synchronisation. It improves data usability and findability and allows applications to receive real-time updates. SEMIC offers support for organisations interested in implementing LDES. Anyone interested in publishing linked data in a balanced way is highly encouraged to explore this page.

Introduction

Today, the main task of data publishers is to meet the user’s needs and expectations, and they typically do so by creating and maintaining multiple querying Application Programming Interfaces (APIs) for their datasets. APIs play an enabling role in establishing digital ecosystems and coordinating digital interactions. However, this approach has several drawbacks for the data publisher. First, keeping multiple APIs online can be costly, as the load generated by data consumers is often borne by the data publisher. Second, data publishers must ensure their APIs stay up to date with the latest standards and technologies, which results in a large, unavoidable maintenance effort.

As new trends emerge, old APIs may become obsolete and incompatible, creating legacy issues for data consumers, who may have to switch to new APIs or deal with outdated data formats and functionalities. Moreover, existing APIs limit data reuse and innovation, because data consumers are constrained by the capabilities and limitations of those APIs. They can only create their own views or indexes on top of the data using their preferred technology.

“Linked Data Event Streams as the base API to publish datasets”

On the other hand, data consumers often have to deal with multiple versions or copies of a dataset that are not consistent or synchronised with one another. These versions or copies may come with different snapshots or deltas, published at different times or frequencies. Although data dumps give data consumers flexibility regarding the functionality they need, this approach is more complex and has various drawbacks. For example, data consumers must track the creation and update history of each version or copy. In addition, they have to compare different versions or copies to identify data changes or conflicts. Data consumers may also struggle to maintain data consistency because versions or copies are outdated or incomplete. Furthermore, changes or updates in the source data are not automatically reflected in their versions or copies.

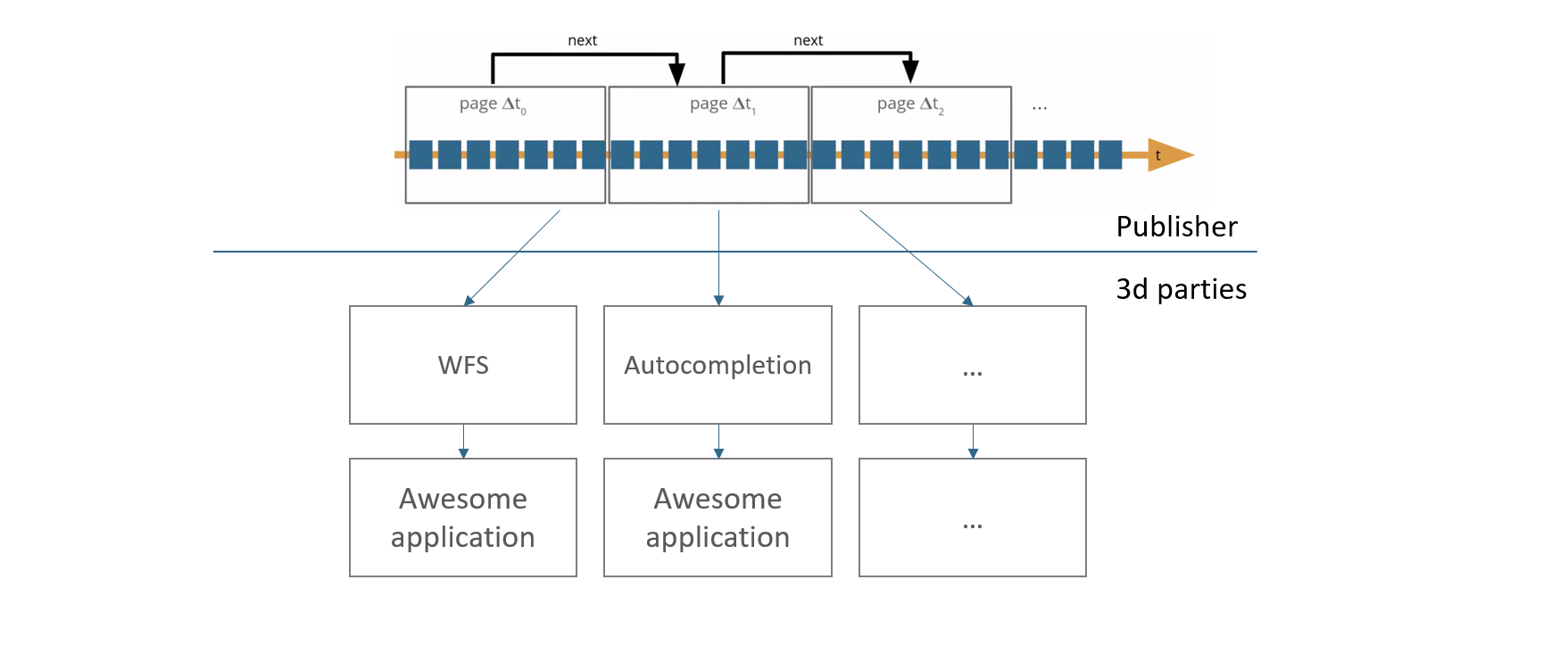

To overcome these challenges, Linked Data Event Streams (LDES) provide a generic and flexible base API for datasets. At its core, an LDES can be understood as a publishing strategy by which a data provider allows multiple third parties to stay in sync with the latest version of the data source in a cost-effective manner. In that sense, LDES is a way out of the so-called "API maintenance hell", as described by Pieter Colpaert from UGent.

With LDES, data consumers can set up workflows to automatically replicate the history of a dataset and stay in sync with the latest updates.

Currently, there is growing interest in the research community in investigating the opportunities that LDES provides, starting from the basics, as described in this blog post of the Spanish Ministry of Digital Transformation.

Specification

In its most basic form, the Linked Data Event Stream (LDES) specification (ldes:EventStream) allows data publishers to publish their dataset as an append-only collection of immutable members. Consumers can host one or more in-sync views on top of the default (append-only) view.

An LDES is defined as a collection of immutable objects, often referred to as LDES members.

These members are described using the Resource Description Framework (RDF), which is one of the cornerstones of Linked Data and on which LDES continues to build.
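The idea of an append-only collection of immutable members can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the specification's data model: the class names `Member` and `EventStream`, the triple representation and the `example.org` identifiers are hypothetical, and a real LDES would express the members as RDF rather than Python tuples.

```python
from dataclasses import dataclass
from typing import Iterator, List, Set, Tuple

# Hypothetical stand-in for an RDF triple: (subject, predicate, object).
Triple = Tuple[str, str, str]


@dataclass(frozen=True)
class Member:
    """One immutable member: an identifier plus the triples describing it."""
    member_id: str
    triples: Tuple[Triple, ...]


class EventStream:
    """Append-only collection: members can be added, never changed or removed."""

    def __init__(self, stream_id: str) -> None:
        self.stream_id = stream_id
        self._members: List[Member] = []
        self._ids: Set[str] = set()

    def append(self, member: Member) -> None:
        # Re-publishing an existing identifier would amount to a mutation.
        if member.member_id in self._ids:
            raise ValueError(f"member {member.member_id} was already published")
        self._ids.add(member.member_id)
        self._members.append(member)

    def members(self) -> Iterator[Member]:
        # Iterate over a snapshot so callers cannot alter the internal list.
        return iter(tuple(self._members))


stream = EventStream("https://example.org/observations")
stream.append(
    Member(
        "https://example.org/observations/1",
        (("https://example.org/observations/1", "https://example.org/temperature", "21.5"),),
    )
)
```

Because `Member` is a frozen dataclass, assigning to one of its fields raises an error, which mirrors the immutability requirement in a very small way.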

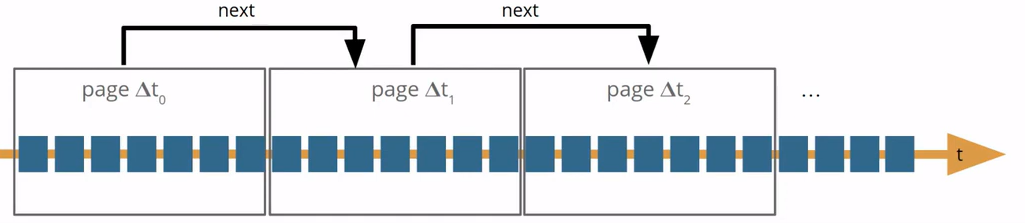

The LDES specification is based on a hypermedia specification, called the TREE specification, which stems from the concept of providing an alternative to one-dimensional HTTP pagination. It allows for fragmentation of a collection of items and interlinking of these fragments. Instead of linking to the next or previous page, the relation describes what elements can be found by following the link to another fragment. The LDES specification extends the TREE specification by stating that every item in the collection must be immutable. The TREE specification is compatible with other specifications such as activitystreams-core, VOCAB-DCAT-2, LDP, or Shape Trees. For specific compatibility rules, please refer to the TREE specification.
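To make the difference with plain next/previous pagination concrete, the following Python sketch shows how a client could use the description carried by each relation to decide which fragments are worth following. It is a simplified illustration: the `Relation` class, the function name and the `example.org` URLs are hypothetical, only two relation types from the TREE vocabulary are handled, and the property `prov:generatedAtTime` is an assumed example of a path.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Relation:
    relation_type: str  # e.g. "tree:GreaterThanOrEqualToRelation"
    path: str           # property the relation constrains (tree:path)
    value: datetime     # boundary value (tree:value)
    node: str           # URL of the linked fragment (tree:node)


def fragments_to_follow(relations: List[Relation], path: str, wanted: datetime) -> List[str]:
    """Return the fragment URLs that may hold members whose `path` equals `wanted`."""
    selected = []
    for rel in relations:
        if rel.path != path:
            # The relation says nothing about this property, so it cannot be pruned.
            selected.append(rel.node)
        elif rel.relation_type == "tree:GreaterThanOrEqualToRelation":
            if wanted >= rel.value:
                selected.append(rel.node)
        elif rel.relation_type == "tree:LessThanRelation":
            if wanted < rel.value:
                selected.append(rel.node)
        else:
            # Generic or unhandled relation type: follow it to stay on the safe side.
            selected.append(rel.node)
    return selected


boundary = datetime.fromisoformat("2023-01-01T00:00:00+00:00")
relations = [
    Relation("tree:LessThanRelation", "prov:generatedAtTime", boundary,
             "https://example.org/ldes?year=2022"),
    Relation("tree:GreaterThanOrEqualToRelation", "prov:generatedAtTime", boundary,
             "https://example.org/ldes?year=2023"),
]
wanted = datetime.fromisoformat("2023-06-15T12:00:00+00:00")
to_visit = fragments_to_follow(relations, "prov:generatedAtTime", wanted)
```

In this example only the 2023 fragment is selected, so the client never has to download the 2022 fragment to answer its question.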

As the term implies, LDESes apply the Linked Data principles to data event streams. A data stream is typically a constant flow of distinct data points, each containing information about an event or change of state that originates from a system that continuously creates data. Examples of data streams include sensor and other IoT data, and financial data.

Currently, the integration of multiple data sources requires the development of custom code, resulting in significant expenses. LDES introduces a technical standard enabling the exchange of data across silos by employing domain-specific ontologies. An LDES enables third parties to build specific services (for example, a WFS or a SPARQL endpoint) themselves on top of their own database, which is always in sync with the original dataset.

An LDES is a constant flow of immutable objects (such as version objects of addresses, sensor observations or archived representations) containing information about changes that originate from a system that continuously creates data. Compared to other event stream specifications, the LDES spec opts to publish the entire object for every change.
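Publishing the entire object for every change has a practical consequence for consumers: the current state of an entity is simply its most recent version object, and no deltas have to be replayed. The Python sketch below illustrates this under stated assumptions. The `VersionObject` fields are hypothetical; they loosely correspond to properties such as `dct:isVersionOf` and `prov:generatedAtTime`, which are an assumption here rather than something this page prescribes.

```python
from typing import Dict, Iterable, NamedTuple


class VersionObject(NamedTuple):
    member_id: str          # unique identifier of this version
    is_version_of: str      # identifier of the entity the version describes
    generated_at: str       # ISO 8601 UTC timestamp, same format for all members
    state: Dict[str, str]   # the complete state of the entity at that moment


def latest_state(members: Iterable[VersionObject]) -> Dict[str, Dict[str, str]]:
    """Keep, per entity, the state carried by its most recent version object."""
    newest: Dict[str, VersionObject] = {}
    for member in members:
        current = newest.get(member.is_version_of)
        # Identically formatted ISO 8601 UTC timestamps sort correctly as strings.
        if current is None or member.generated_at > current.generated_at:
            newest[member.is_version_of] = member
    return {entity: version.state for entity, version in newest.items()}


members = [
    VersionObject("https://example.org/address/1#v1", "https://example.org/address/1",
                  "2023-01-01T00:00:00Z", {"street": "Old Street"}),
    VersionObject("https://example.org/address/1#v2", "https://example.org/address/1",
                  "2023-03-01T00:00:00Z", {"street": "New Street"}),
    VersionObject("https://example.org/address/2#v1", "https://example.org/address/2",
                  "2023-02-01T00:00:00Z", {"street": "Main Street"}),
]
current = latest_state(members)
```

Since every version is complete, the result does not depend on the order in which the members arrive, which keeps consumer logic simple at the cost of publishing more data per change.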

Furthermore, LDES increases data usability and findability, as the data is published in a uniform Linked Data standard on an online URI endpoint. As a result, an LDES is self-descriptive, which facilitates the discovery of additional data through linked resources.

In a nutshell, there are several compelling reasons behind the development of the Linked Data Event Streams specification.

- Linked Data is a powerful paradigm for representing and sharing data on the Web. Still, it has traditionally focused on representing static data rather than events or changes to that data.

- Event streams are becoming increasingly prevalent on the Web, as they enable applications to exchange information about data changes efficiently in real time.

- There is a need for an easy-to-use semantic standard that can offer a uniform way to exchange data across different systems.

- Linked Data Event Streams allow applications to subscribe to a stream of data and receive updates in real-time.

LDES Implementation - Challenges and Benefits

Linked Data Event Streams could be utilised to maintain and open up reference datasets to foster interoperability by advocating the reuse of the identifiers for which they are the authoritative source. By making data accessible via one URI endpoint, LDES makes it easy to retrieve data published as a uniform linked data standard.

The LDES specification thus addresses the shortcomings of the two approaches commonly used to stay in sync with a data source.

- On the one hand, data publishers can provide periodical data dumps or version materialisations, which users have to fully download to stay up to date with the dataset.

- On the other hand, with a querying API users can query the dataset directly without having to download the entire dataset beforehand.

Nevertheless, neither data dumps nor querying APIs will ever fully meet the needs of their end-users. While a data dump gives a possibly outdated view of the dataset, a querying API provides its client with only a partial view of the dataset. LDES addresses both problems by making the full dataset, including its latest updates, available through a single URI endpoint.

LDES Implementation Example - The Flanders Approach

To help you get started with implementing LDES, we present the approach taken by Flanders (BE). The Flanders implementation consists of two components: the LDES CLIENT, for replication and synchronisation, and the LDES SERVER, for publishing one or multiple Linked Data Event Stream(s). Both are described in the following sections.

LDES CLIENT

The LDES CLIENT is designed for replication and synchronisation: the client retrieves the members of an LDES, regularly checks whether new members have been added, and fetches them, enabling data consumers to stay up to date with the dataset.

To understand how an LDES client works, it is important to understand how LDESes are published on the Web. An LDES is published following the Linked Data Fragments principle, meaning that the data is published in one or more fragments and that meaningful semantic links are created between these fragments. Clients can therefore follow these links to discover additional data. The critical aspect for the LDES client, however, is the distinction between mutable and immutable fragments. When publishing an LDES, a common configuration is to set a maximum number of members per fragment. Once a fragment reaches this limit, it is regarded as immutable, and a "Cache-Control: immutable" header is added to the fragment's HTTP response to signal this. This information is crucial for the LDES client: it only needs to retrieve an immutable fragment once, while mutable fragments must be polled regularly to identify new members.
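The role of immutable fragments in synchronisation can be sketched as follows. This is a minimal illustration and not the Flanders LDES CLIENT: the names `Fragment`, `SyncClient` and `is_immutable` are hypothetical, fragments are reduced to lists of member identifiers and links, and the `fetch` function is an assumed callable that stands in for an HTTP request plus RDF parsing.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


def is_immutable(cache_control: str) -> bool:
    """True when a Cache-Control header value contains the `immutable` directive."""
    return "immutable" in [d.strip().lower() for d in cache_control.split(",")]


@dataclass
class Fragment:
    member_ids: List[str]   # identifiers of the members listed in this fragment
    links: List[str]        # URLs of the fragments this one links to
    cache_control: str      # value of the Cache-Control response header


class SyncClient:
    def __init__(self, fetch: Callable[[str], Fragment], start_url: str) -> None:
        self._fetch = fetch
        self._start_url = start_url
        self._immutable: Dict[str, Fragment] = {}  # fragments that never need refetching
        self._seen: Set[str] = set()               # members already delivered

    def sync(self) -> List[str]:
        """Walk the fragments once and return the members not delivered before."""
        new_members: List[str] = []
        queue: List[str] = [self._start_url]
        visited: Set[str] = set()
        while queue:
            url = queue.pop(0)
            if url in visited:
                continue
            visited.add(url)
            fragment = self._immutable.get(url)
            if fragment is None:
                # Mutable, or not retrieved yet: go to the server.
                fragment = self._fetch(url)
                if is_immutable(fragment.cache_control):
                    self._immutable[url] = fragment
            for member_id in fragment.member_ids:
                if member_id not in self._seen:
                    self._seen.add(member_id)
                    new_members.append(member_id)
            # Links of immutable fragments are still followed, from the local copy.
            queue.extend(fragment.links)
        return new_members
```

Calling `sync()` repeatedly, for example on a timer, replicates the full history on the first pass and afterwards only re-requests the fragments that can still change. Note that the local copy of an immutable fragment is kept so that its links can still be followed to reach the mutable fragments behind it.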

More information about the replication and synchronisation of an LDES can be found on the specific documentation page of Digital Flanders.

LDES SERVER

The Linked Data Event Stream (LDES) server is a configurable component that can be used to ingest, store, and (re-)publish one or multiple Linked Data Event Stream(s). The open-source LDES server is built in the context of the VSDS project to exchange (Open) Data easily.

The server can be configured to meet the organisation’s specific needs. Functionalities include retention policy, fragmentation, deletion, snapshot creation, and pagination. These functionalities aim to manage and process large volumes of data efficiently while optimising storage utilisation.

For more information about setting up the LDES Server, have a look at this page.

How can SEMIC support?

SEMIC can provide support to all Member States or organisations that would like to gain insight into how an LDES might be a solution for their problem.

- Implementation pilots: SEMIC provides the specifications on LDES as the main starting point for all interested parties. Our team of experts can also provide further support by collaborating with the public administrations' technical teams to ensure optimal implementation.

- Strategy and roadmap: SEMIC can help identify possible use cases in your organisation and define a set of concrete implementation steps to adopt LDES progressively and effectively.

- Knowledge sharing: SEMIC can provide knowledge sharing sessions in the form of webinars or even 1:1 roadshows in which key stakeholders are given the chance to exchange experiences and best practices.

Please feel free to reach out to emiel.dhondt@pwc.com if you have any questions on the topic of LDES.

SEMIC TV

Interested in Linked Data Event Streams and how SEMIC contributes to them? Here is what Pieter Colpaert, Professor at IDLab (Ghent University), would like to share with you!